Artificial Intelligence and Ethics in EU-funded Projects

Artificial Intelligence is the aim or the enabler in many ongoing EU-funded projects and upcoming Calls for proposals. At the EU level, Artificial Intelligence (AI) is regarded as a key element of economic growth and competitiveness. AI is one of the most important applications of the digital economy based on the processing of data. At the same time, the human and ethical implications of AI and its impact on various research areas are being highlighted.

For this reason, the issue of Artificial Intelligence and Ethics is addressed on different levels within the EU: from policy, through legal and regulatory matters, to research and development.

Artificial Intelligence and Ethics: a policy framework

The cornerstone document outlining the EU’s approach to Artificial Intelligence and Ethics is the European Commission’s White Paper On Artificial Intelligence – A European approach to excellence and trust. This document presents “policy options to enable a trustworthy and secure development of AI in Europe, in full respect of the values and rights of EU citizens”.

The White Paper is built on two main elements:

- the policy framework

- the key elements for a future regulatory framework

The first element focuses on the “ecosystem of excellence”, which aims to capitalise on the potential benefits of AI across all sectors and industries. This part addresses the research and industrial infrastructure in the EU and draws on the potential of its computing infrastructure (e.g. high-performance computers). The document clearly states: “the centres and the networks should concentrate in sectors where Europe has the potential to become a global champion such as industry, health, transport, finance, agrifood value chains, energy/environment, forestry, earth observation and space.”

Artificial Intelligence and Ethics: a regulatory approach


The second element, the future regulatory framework, addresses the potential risks AI may present and aims to propose adequate safeguards. To ensure the proper balance between Artificial Intelligence and Ethics, the Commission created a discussion platform organised around the High-Level Expert Group on Artificial Intelligence.

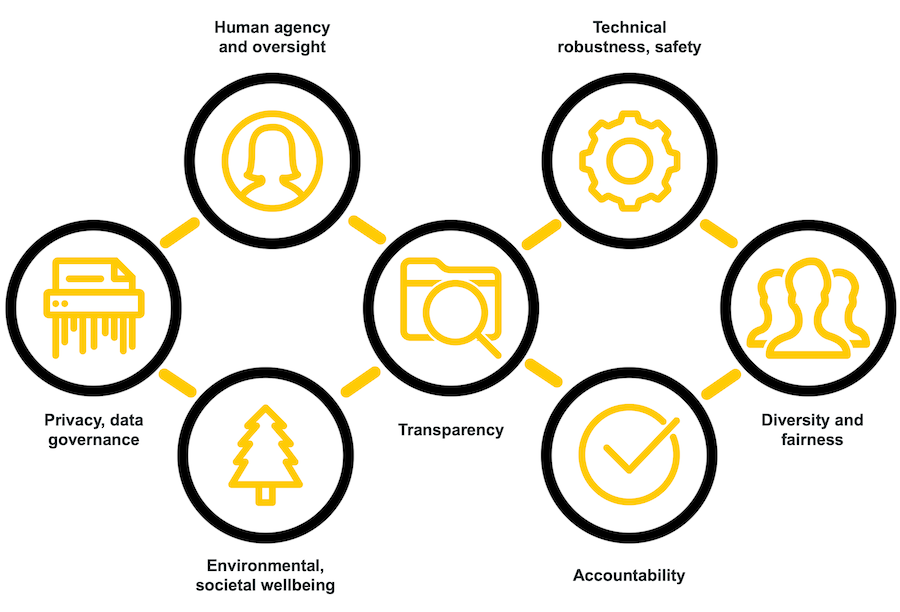

The Group has already identified several key requirements concerning AI:

- Human agency and oversight,

- Technical robustness and safety,

- Privacy and data governance,

- Transparency,

- Diversity, non-discrimination and fairness,

- Societal and environmental wellbeing, and

- Accountability

While non-binding, these requirements will influence the ongoing discussion on potential regulation in this area, namely the Proposal for a Regulation laying down harmonised rules on artificial intelligence.

When it comes to the regulatory framework, the EC’s White Paper outlines the potential harms AI may bring: “This harm might be both material (safety and health of individuals, including loss of life, damage to property) and immaterial (loss of privacy, limitations to the right of freedom of expression, human dignity, discrimination for instance in access to employment), and can relate to a wide variety of risks. A regulatory framework should concentrate on how to minimise the various risks of potential harm, in particular the most significant ones.”

Given the speed at which AI is being developed and its potential implications, flexibility will need to be built into the regulatory framework to future-proof it. While the Proposal for a Regulation laying down harmonised rules on artificial intelligence must be clear enough to be applicable in real life, it must also remain adaptable to future solutions.

Addressing Artificial Intelligence and Ethics issues from an ethics perspective

The EU policies and priorities are being translated into Calls for proposals for what later become EU-funded projects. The EC’s approach to artificial intelligence and ethics incorporates elements aiming to:

- Enable conditions for AI’s development and uptake, ensuring commercialisation and go-to-market of AI-related research

- Foster digital skills and promote a human-centric approach to AI

- Build strategic leadership in high-impact sectors

In this context, targeting AI in the EC’s research framework programmes serves the purpose of coordinating investments and maximising the research outputs of programmes such as Digital Europe and Horizon Europe. This, however, also makes it necessary to ensure ethics compliance wherever AI is used in research projects.

To provide guidance on Artificial Intelligence and Ethics in EU projects, the EC published the “Ethics guidelines for trustworthy AI”, a document that is now referenced almost systematically during ethics evaluations and is almost always recommended to applicants and consortia.

AI can be used in breach of EU privacy and data protection rules, for example to de-anonymise data about individuals, and it can raise risks of mass surveillance by analysing vast quantities of personal data and identifying links among them. It is therefore relevant to mention here the EC’s Guidance Note on “Ethics and Data Protection”.

Finally, in rare cases, the ethics evaluators and the consortia or applicants fulfilling ethics requirements must also consider AI from the military/civil application viewpoint. Two main documents are of interest in this case: the Guidance note “Research with an exclusive focus on civil applications” and the Guidance note “Research involving dual-use items”.

Certifying the security and resilience of supply chain services

The CYRENE project aims to promote trust and confidence of the European consumers, providers and suppliers by enhancing the security, privacy, resilience, accountability and trustworthiness of Supply Chains. One of the main impacts the project will achieve is to pave the way for a competitive and trustworthy Digital Single Market.

CYRENE aims to support the security and resilience of Supply Chains (SCs) through the following certification schemes:

- Security Certification Scheme for Supply Chain (e.g. risk assessment tool and process);

- ICT Security Certification Scheme for ICT-based or ICT-interconnected Supply Chain;

- ICT Security Certification Scheme for the IoT devices and ICT systems of SCs (e.g. Maritime, Transport or Manufacturing), which should differ from traditional IoT and systems in that more stress is put on data protection and privacy issues.

Engaging AI, Information Mining and Deep Learning in security and privacy risk evaluation facilitates the detection and analysis of new, sophisticated and advanced persistent threats, the handling of complex cybersecurity incidents and data breaches, and the sharing of security-related information.

In this context, CYRENE introduces AI and machine learning techniques that allow the systems and services to monitor a wider range of factors in order to identify patterns of abnormal activity. It relies on two main components: the artificial intelligence (AI) engine and the threat intelligence. The AI engine produces optimal learning models for threat detection and enables efficient model updates. Threat intelligence, on the other hand, offers real-time cyber threat monitoring and intrusion detection.
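To make the idea of identifying patterns of abnormal activity more concrete, here is a minimal Python sketch. It is not CYRENE's AI engine, whose internals are not described here; it is a simple z-score check, and the function name, the metric (failed logins per minute) and the threshold are all assumptions chosen purely for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observations, threshold=3.0):
    """Return (index, value) pairs from `observations` that deviate from
    the `baseline` sample by more than `threshold` standard deviations.

    Hypothetical helper for illustration only.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation, needs >= 2 points
    if sigma == 0:
        # A constant baseline: treat any different value as abnormal.
        return [(i, x) for i, x in enumerate(observations) if x != mu]
    return [
        (i, x)
        for i, x in enumerate(observations)
        if abs(x - mu) / sigma > threshold
    ]


# Assumed example metric: failed login attempts per minute.
baseline = [4, 5, 6, 5, 4, 6, 5, 5, 4, 6]
observed = [5, 6, 48, 4]
anomalies = find_anomalies(baseline, observed)
print(anomalies)
```

A real threat detection model would monitor many factors at once and be retrained as behaviour changes; the sketch only shows the basic step of comparing new observations with a learned picture of normal activity.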

From an ethics perspective, the use of AI within CYRENE does not require close monitoring. Its purpose is not necessarily the processing of personal data, so the risks it presents for the rights of individuals are quite limited. The project focuses on more technical aspects of cyber threat monitoring and privacy assurance (the how) rather than on the information including personal data (the what) that may be put at risk due to a potential security incident.

Nevertheless, because the requirements that AI systems must meet in order to be deemed trustworthy remain applicable, Privanova, as the ethics and legal lead of the project, remains vigilant in this regard. Together with the Project Coordinator and relevant partners, we consider accountability to be the key principle in the context of CYRENE. This concerns, in particular, the auditability of AI systems, which “enables the assessment of algorithms, data and design processes” and “plays a key role therein, especially in critical applications.”
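One way to picture auditability in practice is a tamper-evident record of what an AI system decided and on what basis. The Python sketch below is an assumption made for illustration, not a description of CYRENE's implementation: the record fields, the function names and the hash-chaining approach are all hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash preceding the first record


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the record body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_record(log, model_version, features, decision):
    """Append one decision record, chained to the previous record's hash."""
    prev_hash = log[-1]["record_hash"] if log else GENESIS_HASH
    body = {
        "model_version": model_version,
        "features": features,
        "decision": decision,
        "prev_hash": prev_hash,
    }
    record = {**body, "record_hash": _digest(body)}
    log.append(record)
    return record


def verify_log(log):
    """Return True if no record has been altered, removed or reordered."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["prev_hash"] != prev_hash:
            return False
        if record["record_hash"] != _digest(body):
            return False
        prev_hash = record["record_hash"]
    return True


# Assumed example: two decisions by a hypothetical detection model.
audit_log = []
append_record(audit_log, "detector-v1", {"failed_logins": 48}, "alert")
append_record(audit_log, "detector-v1", {"failed_logins": 5}, "benign")
print(verify_log(audit_log))
```

Because each record stores the hash of the one before it, an auditor can detect later changes to any stored decision, which supports the kind of after-the-fact assessment that the accountability requirement has in mind.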

Have you already heard of the initiatives taken by the EU? What is your view on this sensitive subject?

Reach out to us and share your views, either by using our contact form or by following our social media accounts on Twitter and LinkedIn. Don’t forget to subscribe to our Newsletter for regular updates!

Signed by: The Privanova team. See the full article here.

KEY FACTS

Project Coordinator: Sofoklis Efremidis

Institution: Maggioli SPA

Email: info{at}cyrene.eu

Start: 1-10-2020

Duration: 36 months

Participating organisations: 14

Number of countries: 10

FUNDING

This project has received funding from the European Union’s Horizon 2020 Research and Innovation program under grant agreement No 952690. The website reflects only the view of the author(s) and the Commission is not responsible for any use that may be made of the information it contains.
